Why does every choice come with an entropy tax?

I present a very general derivation that shows how every choice carries an unavoidable “entropy tax,” reflecting the hidden cost of shifting from old beliefs to new choices.

Cite as: Ortega, P.A. “Why does every choice come with a tax?”, Tech Note 3, DAIOS, 2024.

Imagine every choice you make —whether trivial or life-changing— comes with a hidden “tax”. This isn't a financial toll but an entropy tax, an inherent cost tied to the mental effort of shifting from what you initially believe (the prior) to what you choose after deliberation (the posterior).

This concept lies at the heart of information-theoretic bounded rationality, and the tax formula looks like this: \[ \text{Choice Tax} \propto \sum_x P(x|d) \log \frac{ P(x|d) }{ P(x) }, \] where $x$ is a choice in $\mathcal{X}$, $P(x)$ is the prior choice distribution, and $P(x|d)$ is the posterior choice distribution conditioned on deliberation $d$.

Where does this come from?

Assumption 1: Temporal progress as conditioning

First we need to model temporal progress of any kind. We'll go with a “spacetime” representation that is standard in measure theory. This works as follows. We assume that we have a collection of all the possible realizations of a process of interest. This is our sample space $\Omega$. To make things simple, let's assume this set is finite (but potentially huge). We also place a probability distribution $P$ over all the realizations $\omega \in \Omega$.

Now, any event (be it a choice, an observation, a thought, etc.) is a subset of $\Omega$. Whenever an event $e \subset \Omega$ occurs, we condition our sample space on $e$. This means that we restrict our focus to the elements $\omega \in e$ inside the event, and then renormalize our probabilities: \[ P(\omega | e) = \frac{ P(\omega) }{ P(e) }, \qquad P(e) = \sum_{\omega \in e} P(\omega). \]

For every additional event that occurs, we add new constraints to the conditioning event using a conjunction. Hence, if events $e_1, e_2, \ldots, e_N$ happen, the final probability distribution over realizations will be \[ P(\omega | e_1, \ldots, e_N) := P(\omega | e_1 \cap \ldots \cap e_N) = \frac{ P(\omega) }{ P(e_1 \cap \ldots \cap e_N) }. \] This models a passage of time where novel events introduce irreversible changes to the state of the realization.
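To make the conditioning rule concrete, here is a minimal sketch on a toy sample space. The six realizations, the events `e1` and `e2`, and the helper name `condition` are assumptions made up for illustration. It checks that conditioning on two events one after the other gives the same distribution as conditioning once on their intersection.

```python
from fractions import Fraction

def condition(P, event):
    """Restrict P to the realizations inside `event` and renormalize."""
    mass = sum(p for w, p in P.items() if w in event)
    if mass == 0:
        raise ValueError("cannot condition on an event of probability zero")
    return {w: p / mass for w, p in P.items() if w in event}

# Hypothetical sample space: six equally likely realizations.
P = {w: Fraction(1, 6) for w in range(1, 7)}

e1 = {2, 3, 4, 5, 6}  # first event to occur
e2 = {1, 2, 3, 4}     # second event to occur

# Conditioning step by step, as time passes ...
sequential = condition(condition(P, e1), e2)
# ... versus conditioning once on the conjunction of both events.
joint = condition(P, e1 & e2)

print(sequential == joint)  # the two routes agree
```

Exact fractions are used here only so that the comparison does not depend on floating-point rounding.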

Assumption 2: Restrictions on the cost function

Next, we'll impose constraints on the cost function. We want our cost function to capture efforts that are structurally consistent with the underlying probability space. (Later, we'll see how to relax these assumptions without compromising these structural constraints.) The following requirements are natural:

- Continuity: The cost function should be a continuous function of the conditional probabilities. Formally, for every pair $a, b$ of events such that $b \subset a$, the conditional cost $C(b|a)$ of bringing about the event $b$ given the event $a$ is a continuous function of the conditional probability $P(b|a)$.

- Transitivity: If there is a sequence of three events, bringing about the last from the first costs as much as doing it in two steps. Formally, for every triplet of events $a, b, c$ such that $c \subset b \subset a$, the cost is additive: $C(c|a) = C(b|a) + C(c|b)$.

- Monotonicity: Events of higher probability are easier to bring about than those of lower probability. Formally, we have for every $a, b, c, d$ such that $b \subset a$ and $d \subset c$, $P(b|a) > P(d|c)$ iff $C(b|a) < C(d|c)$.

These requirements are essentially equivalent to Shannon's axioms for entropy restated in terms of events. As a result, the only cost function that obeys these requirements is the information content: \[ C(b|a) = -\frac{1}{\beta} \log P(b|a), \] where $\beta > 0$ is a factor that determines the units of the cost.
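As a quick sanity check, the sketch below verifies transitivity and monotonicity numerically for a logarithmic cost on three nested events $c \subset b \subset a$. The event probabilities and the name `scale` (standing in for the positive prefactor that sets the units) are assumptions chosen for illustration.

```python
import math

def cost(p_inner, p_outer, scale=1.0):
    """Cost of bringing about an inner event given an outer one:
    -scale * log P(inner | outer), with P(inner | outer) = p_inner / p_outer."""
    return -scale * math.log(p_inner / p_outer)

# Hypothetical probabilities of three nested events, c inside b inside a.
p_a, p_b, p_c = 0.8, 0.4, 0.1

direct = cost(p_c, p_a)                       # C(c|a)
two_steps = cost(p_b, p_a) + cost(p_c, p_b)   # C(b|a) + C(c|b)

# Transitivity: going from a to c directly costs the same as going via b.
print(math.isclose(direct, two_steps))

# Monotonicity: P(b|a) = 0.5 > P(c|b) = 0.25, so b given a is cheaper.
print(cost(p_b, p_a) < cost(p_c, p_b))
```

Additivity holds because the conditional probabilities multiply along the chain, $P(c|a) = P(b|a) P(c|b)$, and the logarithm turns that product into a sum.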

Modeling choices

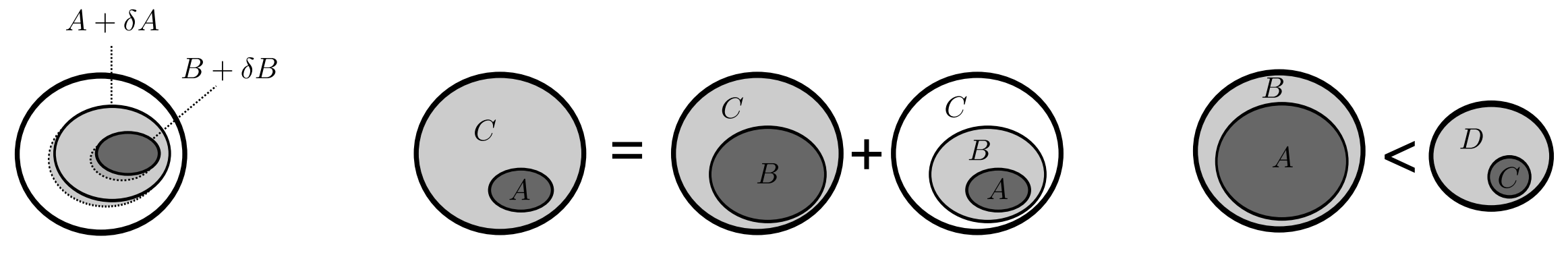

When choosing, decision-makers spend resources (thinking, obtaining data, etc.) that alter their choice probabilities in order to reflect their preferences:

How do we go about modeling this? Following the logic outlined above, “thinking” can be modeled as conditioning on an event $d$ in which the choice events have the desired relative sizes:

Notice how we start out from prior knowledge captured by the event $c$, and how conditioning on the “thought event” $d$ (where $d \subset c$) changed the relative sizes of the choice events $x_1, x_2, x_3$ in a way consistent with the previous picture.

Cost of deliberation

Now, based on our sketch above, let's calculate the cost of transforming the prior choice probabilities into posterior choice probabilities: \[ \begin{align} C(d|c) &= -\frac{1}{\beta} \log P(d|c)\\ &= -\frac{1}{\beta} \sum_x P(x|d) \log \biggl\{ P(d|c) \frac{ P(x|d)P(x|c) }{ P(x|d)P(x|c) } \biggr\} \\ &= -\frac{1}{\beta} \sum_x P(x|d)\log\frac{ P(x \cap d) }{ P(x \cap c) } + \frac{1}{\beta} \sum_x P(x|d) \log\frac{ P(x|d) }{ P(x|c) } \\ &= \underbrace{ \sum_x P(x|d) C(x \cap d|x \cap c) }_\text{expected cost} + \underbrace{ \frac{1}{\beta} \sum_x P(x|d) \log \frac{ P(x|d) }{ P(x|c) } }_\text{choice tax}, \end{align} \] where we've used the identities $P(a|b) = P(a \cap b)/P(b)$, $x = x \cap x$, and $d = d \cap c$.

We've obtained two expectation terms. The second is proportional to the Kullback-Leibler divergence of the posterior choice probabilities from the prior choice probabilities. What is the first expectation?

The first expectation represents the expected cost of each individual choice (if each choice were to occur deterministically). This is because each term $C(x \cap d|x \cap c)$ measures the cost of transforming the relative probability of a specific choice.
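The decomposition is easy to check numerically. The following sketch builds a small sample space and confirms that $C(d|c)$ equals the expected cost plus the choice tax. The toy space, the particular thought event `d`, and the value of `beta` are assumptions picked for illustration.

```python
import math

# Hypothetical toy model: each realization is (choice, index), with uniform P.
omega = [(x, i) for x in "ABC" for i in range(4)]
P = {w: 1 / len(omega) for w in omega}

def prob(event):
    return sum(P[w] for w in event)

def cost(inner, outer, beta):
    """C(inner | outer) = -(1/beta) log P(inner | outer), inner a subset of outer."""
    return -math.log(prob(inner) / prob(outer)) / beta

beta = 2.0
c = set(omega)  # prior knowledge
# Thought event, a subset of c that favors choice A over B over C.
d = {("A", 0), ("A", 1), ("A", 2), ("B", 0), ("B", 1), ("C", 0)}
choices = {x: {w for w in omega if w[0] == x} for x in "ABC"}

prior = {x: prob(ev & c) / prob(c) for x, ev in choices.items()}
posterior = {x: prob(ev & d) / prob(d) for x, ev in choices.items()}

expected_cost = sum(
    posterior[x] * cost(choices[x] & d, choices[x] & c, beta) for x in choices
)
choice_tax = sum(
    posterior[x] * math.log(posterior[x] / prior[x]) for x in choices
) / beta
total = cost(d, c, beta)

print(math.isclose(total, expected_cost + choice_tax))
```

Every choice keeps nonzero posterior probability in this example, so no logarithm of zero appears; a choice ruled out entirely by `d` would need the usual convention $0 \log 0 = 0$.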

Connecting to the free energy objective

We can transform the above equality into a variational principle by replacing the individual choice costs $C(x \cap d|x \cap c)$ with arbitrary numbers. The resulting expression is convex in the posterior choice probabilities $P(x|d)$, so we get a nice and clean objective function with a unique minimum.

We can even go a step further: noticing that the variational problem is translationally invariant in the costs, and multiplying the expression by $-1$, we can treat the resulting “negative costs plus a constant” as utilities, obtaining \[ \sum_x P(x|d) U(x) - \frac{1}{\beta} \sum_x P(x|d) \log \frac{ P(x|d) }{ P(x|c) }. \]
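The sketch below evaluates this objective on a small example. It relies on a standard result that is not derived in this note: the maximizer has the closed form $P(x|d) \propto P(x|c) \exp(\beta U(x))$. The prior, the utilities, and the value of `beta` are assumptions chosen for illustration. The code compares the closed-form posterior against two other candidates and checks that shifting all utilities by a constant leaves it unchanged.

```python
import math

def free_energy(q, prior, U, beta):
    """Expected utility minus (1/beta) times KL(q || prior)."""
    expected_utility = sum(q[x] * U[x] for x in q)
    kl = sum(q[x] * math.log(q[x] / prior[x]) for x in q if q[x] > 0)
    return expected_utility - kl / beta

def optimal_posterior(prior, U, beta):
    """Assumed closed form: posterior proportional to prior * exp(beta * U)."""
    weights = {x: prior[x] * math.exp(beta * U[x]) for x in prior}
    Z = sum(weights.values())
    return {x: w / Z for x, w in weights.items()}

# Hypothetical prior choice probabilities and utilities.
prior = {"A": 0.5, "B": 0.3, "C": 0.2}
U = {"A": 0.0, "B": 1.0, "C": 2.0}
beta = 1.5

best = optimal_posterior(prior, U, beta)
uniform = {x: 1 / 3 for x in prior}

# The closed-form posterior scores at least as high as other candidates.
print(free_energy(best, prior, U, beta) >= free_energy(prior, prior, U, beta))
print(free_energy(best, prior, U, beta) >= free_energy(uniform, prior, U, beta))

# Adding a constant to every utility does not change the optimal posterior.
shifted = optimal_posterior(prior, {x: u + 10.0 for x, u in U.items()}, beta)
print(all(math.isclose(best[x], shifted[x]) for x in best))
```

A larger `beta` makes the tax cheaper relative to the utilities and pushes the posterior towards the highest-utility choice; as `beta` approaches zero the posterior stays close to the prior.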

This last expression is colloquially known as the free energy objective in the RL literature (although strictly speaking, it is the negative free energy difference – but that's a topic for another post).

Conclusion

In this post I've shown:

- how the free energy objective can be derived from simple assumptions and an appropriate interpretation of the resulting expression;

- and how the KL-divergence arises as an inevitable cost a decision maker has to pay in order to change their choice probabilities.

The free energy objective is invariant to translations of the utilities, i.e. if $U(x)$ is a utility function, then $U'(x) = U(x) + \alpha$, where $\alpha$ is a constant, leads to the same posterior choice probabilities. In this sense, utilities are essentially structurally equivalent to information costs.

Why does this matter?

The entropy tax is more than a mathematical curiosity—it’s a profound insight into decision-making:

- It’s unavoidable: Shifting your choices from prior assumptions always incurs a cost.

- It’s universal: This principle applies to decisions at all scales, from trivial daily choices to complex AI systems.

Moreover, utilities and information costs are interchangeable, revealing deep structural equivalences in decision theory.

Every choice you make has a hidden cost, an entropy tax that reflects the effort of deliberation and change. This principle emerges naturally from foundational assumptions about time, probabilities, and costs.

Next time you’re weighing options, remember: even the act of choosing comes with its own price.

References

- Our first derivation of the free energy difference is in “Information, Utility, and Bounded Rationality” by Ortega & Braun, Proceedings of the Conference on Artificial General Intelligence (AGI 2011), 2011.

- The derivation with probability measures comes from “Information-Theoretic Bounded Rationality” by Ortega et al., 2015. arXiv:1512.06789.